How Soon Will We Make Artificial Brains?

Michael D. Mauk

Artificial minds are all the rage in books and movies. The popularity of characters such as Commander Data of Star Trek: The Next Generation reveals how much we enjoy considering the possibility of machines that think. This idea is made even more captivating by real-world artificial intelligence successes such as the computer Watson's domination of the TV game show Jeopardy! and the Deep Blue computer's victory over chess champion Garry Kasparov. Yet while victories in narrowly defined endeavors like these games are truly impressive, a machine with a humanlike mind seems many years away.

What will be required to span the gap is surprisingly simple: mostly hard work. To date, no fundamental law has emerged that precludes the construction of an artificial mind. Instead, neuroscience research has revealed many of the essential principles of how brain cells work, and large-scale “connectome” projects may soon provide the complete wiring diagram of a human brain at a certain moment in time. Yes, there are many details left to discover, and to realize this goal the speed and capacity of computers must grow well beyond today’s already impressive levels. There is, however, no conceptual barrier—no absence of a great unifying principle—in our way (but see the Nicolelis essay in this volume for a counterview). Here, my goals are to make this claim more concrete and intuitive while showing how research attempting to recreate the processing of brain systems helps advance our understanding of our brains and ourselves.

Pessimism about understanding or mimicking human brains starts with a sense of the brain's vastness and complexity. Our brains are composed of around eighty billion neurons, interconnected to form an enormous network of approximately five hundred trillion connections called synapses. As with any computing device, understanding the brain involves characterizing the properties of its main components (neurons), the nature of their connections (synapses), and the pattern of interconnections (wiring diagram). The numbers are indeed staggering, but what is crucial is that both neurons and their connections obey rules that are finite and understandable.
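Dividing one of these approximate totals by the other gives a sense of how densely the network is wired. The result is only an average; real neurons differ enormously in how many synapses they make and receive.

$$
\frac{5 \times 10^{14}\ \text{synapses}}{8 \times 10^{10}\ \text{neurons}} = 6.25 \times 10^{3} \approx 6{,}000\ \text{synapses per neuron}
$$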

The eighty billion neurons of a human brain operate by variations on a simple plan. Each generates electrical impulses that propagate down wirelike axons to synapses, where they trigger chemical signals to the other neurons to which they connect. The essence of a neuron is this: it receives chemical signals from other neurons and then generates its own electrical signals based on rules implemented by its particular physiology. These electrical signals are then converted back into chemical signals at the next synapse in the signaling chain. This means that we can know what a neuron does when we can describe its rules for converting its inputs into outputs—in other words, we could mimic its function with a device that could implement the same sets of rules. Depending on how fine-grained we make categories, there are on the order of hundreds (not tens, not thousands) of types of neurons. So determining a reasonably accurate description of the input-output rules for each type of neuron is not terribly daunting. In fact, a good deal of progress has been made in this regard.
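To make the idea of an input-output rule concrete, here is a minimal Python sketch of one of the simplest textbook rules, the leaky integrate-and-fire model. It is an illustrative assumption, not a description of any particular neuron type: the `LIFNeuron` class, the `run` helper, the parameter values, and the treatment of input as millivolts of drive per time step are all hypothetical. The neuron's potential leaks back toward rest, input pushes it up, and when it crosses threshold the neuron "spikes" and resets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """A leaky integrate-and-fire neuron: one simple input-output rule.

    All parameter values are illustrative assumptions, not measurements
    of any real neuron type.
    """

    tau_ms: float = 20.0        # membrane time constant (ms)
    v_rest: float = -70.0       # resting potential (mV)
    v_threshold: float = -54.0  # spike threshold (mV)
    v_reset: float = -70.0      # potential right after a spike (mV)
    v: float = -70.0            # current membrane potential (mV)

    def step(self, input_mv: float, dt_ms: float = 1.0) -> bool:
        """Advance one time step and return True if the neuron spikes."""
        # Leak back toward rest, then add this step's input drive.
        self.v += (dt_ms / self.tau_ms) * (self.v_rest - self.v) + input_mv
        if self.v >= self.v_threshold:
            self.v = self.v_reset  # fire, then reset
            return True
        return False


def run(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Turn a sequence of input drives into a spike train (1 = spike, 0 = silent)."""
    return [int(neuron.step(x)) for x in inputs]


print(run(LIFNeuron(), [0.5] * 10))  # weak drive
print(run(LIFNeuron(), [4.0] * 10))  # strong drive
```

With these made-up parameters, a weak constant input (0.5 per step) settles below threshold and never produces a spike, while a stronger one (4.0 per step) produces a spike on every fifth step. Real neuron types would need richer rules, but the principle is the same: a fixed rule turns patterns of input into patterns of output.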

What about the synaptic connections between neurons? Nothing appears to stand in the way here either: the principles governing how synapses work are increasingly well understood. Synapses contain protein-based micromachines that convert electrical signals generated by a neuron into the release of minuscule amounts of chemical neurotransmitter into the narrow gap between two connected neurons. The binding of neurotransmitter molecules to the receiving neuron nudges its electrical signals toward more spiking activity (an excitatory connection) or toward less spiking activity (an inhibitory connection). Many details about different types of synapses remain to be discovered, but this task is manageable, with no huge conceptual or logistical barriers.
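A common simplification, assumed here rather than taken from the essay, treats each synapse as a single signed number: positive for an excitatory connection, negative for an inhibitory one. The hypothetical `Synapse` class and `net_input` function below sum the effects of whichever presynaptic neurons have just spiked, ignoring neurotransmitter release and binding entirely.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Synapse:
    """A simplified synapse: a presynaptic source plus a signed strength.

    Positive weights stand for excitatory connections (pushing the receiving
    neuron toward more spiking); negative weights stand for inhibitory ones.
    """

    source: str
    weight: float  # drive added to the receiving neuron per presynaptic spike


def net_input(synapses: list[Synapse], spiking: set[str]) -> float:
    """Sum the effects of every synapse whose presynaptic neuron just spiked."""
    return sum(s.weight for s in synapses if s.source in spiking)


inputs = [Synapse("a", 2.0), Synapse("b", 1.5), Synapse("c", -3.0)]
print(net_input(inputs, {"a", "b"}))       # excitation only
print(net_input(inputs, {"a", "b", "c"}))  # excitation plus inhibition
```

Here neurons `a` and `b` spiking together deliver a net drive of 3.5, but when the inhibitory neuron `c` fires at the same time the drive drops to 0.5, nudging the receiving neuron toward less spiking.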

Where relevant, our description of a synapse type must also include its ability to change its properties persistently when certain patterns of activity occur. These changes, collectively known as synaptic plasticity, can give a neuron a stronger or weaker influence on the neurons to which it connects. They mediate learning and memory: our memories are stored as particular patterns of strength across the trillions of synapses in our brains. Importantly, we need not know every last molecular detail of how plasticity works. To build a proper artificial synapse, we simply need to understand the rules that govern its plasticity.
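As an illustration of what a plasticity rule might look like when stated without molecular detail, here is a hypothetical Hebbian-style rule in Python. It is not a rule the essay proposes or a model of any specific synapse: the `update_weight` function, the learning rate, the bounds, and the choice to weaken a synapse when its input is active but the receiving neuron stays silent are all assumptions made for the sketch. It says only when a weight goes up or down, and by how much.

```python
def update_weight(
    weight: float,
    pre_active: bool,
    post_active: bool,
    rate: float = 0.1,
    w_min: float = 0.0,
    w_max: float = 1.0,
) -> float:
    """One step of a hypothetical Hebbian-style plasticity rule.

    - input and receiving neuron active together: strengthen
    - input active, receiving neuron silent: weaken
    - input silent: no change
    The result is kept within [w_min, w_max].
    """
    if pre_active and post_active:
        weight += rate * (w_max - weight)  # move part of the way toward the ceiling
    elif pre_active:
        weight -= rate * (weight - w_min)  # move part of the way toward the floor
    return min(max(weight, w_min), w_max)


w = 0.5
for _ in range(50):  # repeated paired activity
    w = update_weight(w, pre_active=True, post_active=True)
print(round(w, 3))
```

Starting from 0.5, fifty consecutive pairings of input and receiving-neuron activity push the weight close to its ceiling of 1.0, while unpaired input activity would drive it back down. The stored "memory" is nothing more than where the weight ends up.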

Even with eighty billion neurons, then, the numbers of neuron types and of plasticity rules are finite and understandable. Building artificial versions of each neuron type is an entirely doable task, whether in a physical device such as a chip or as a software subroutine. In either case, these artificial neurons can receive certain patterns of input across their synthetic synapses and return the appropriate patterns of output. As long as each artificial neuron produces the correct output for any pattern of inputs its real counterpart might receive, we have the building blocks needed to build an artificial brain.

Given the vast complexity of each brain's wiring diagram, it is easy to imagine that there is simply too much to understand, even with a manageable number of neuron types. However, there are reasons to believe that this complexity can be tamed. The connections between neurons are not random; rather, they follow rules that are readily identifiable. For example, in a brain region called the cerebellar cortex, a type of neuron called a Golgi cell receives synapses from many axons called mossy fibers and also from the axons of granule cells. Golgi cells also, as it turns out, extend axons that form inhibitory synapses onto neighboring Golgi cells, and the arrangement is reciprocal: each Golgi cell is in turn inhibited by its neighbors. With this information, we could wire up the Golgi cells of an artificial brain.
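Rules like these compress a huge wiring diagram into a few statements. The Python sketch below is hypothetical throughout: the `wire_golgi_cells` function, the one-dimensional cell positions, and the connection radius are all invented for illustration. It applies three rules drawn from the paragraph above: mossy fibers and granule-cell axons contact nearby Golgi cells (both inputs are excitatory, hence the "+" sign), and neighboring Golgi cells inhibit one another in both directions. Real cerebellar wiring depends on three-dimensional geometry and connection probabilities that this sketch ignores.

```python
from __future__ import annotations

from itertools import chain, combinations


def wire_golgi_cells(
    golgi: dict[str, float],
    mossy: dict[str, float],
    granule: dict[str, float],
    radius: float,
) -> set[tuple[str, str, str]]:
    """Derive connections from a few wiring rules instead of listing each one.

    Every cell has a hypothetical 1-D position. Returns a set of
    (presynaptic, postsynaptic, sign) triples, with sign "+" (excitatory)
    or "-" (inhibitory).
    """
    edges: set[tuple[str, str, str]] = set()

    # Rules 1 and 2: nearby mossy fibers and granule-cell axons excite each Golgi cell.
    for golgi_name, golgi_pos in golgi.items():
        for src, pos in chain(mossy.items(), granule.items()):
            if abs(pos - golgi_pos) <= radius:
                edges.add((src, golgi_name, "+"))

    # Rule 3: neighboring Golgi cells inhibit one another, in both directions.
    for a, b in combinations(golgi, 2):
        if abs(golgi[a] - golgi[b]) <= radius:
            edges.add((a, b, "-"))
            edges.add((b, a, "-"))

    return edges


golgi_cells = {"G1": 0.0, "G2": 1.0, "G3": 5.0}
connections = wire_golgi_cells(golgi_cells, {"M1": 0.5}, {"Gr1": 4.8}, radius=1.5)
for pre, post, sign in sorted(connections):
    print(f"{pre} -> {post} ({sign})")
```

With three Golgi cells at positions 0, 1, and 5, one mossy fiber at 0.5, one granule cell at 4.8, and a radius of 1.5, the rules produce five connections, including a reciprocal inhibitory pair between the two Golgi cells at 0 and 1. Adding more cells changes the inputs to the function, not the rules.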

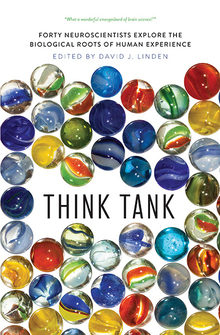

From Think Tank, edited by David J. Linden. Published by Yale University Press in 2019. Reproduced with permission.

David J. Linden is professor of neuroscience at the Johns Hopkins University School of Medicine. He is the author of three books: The Accidental Mind, The Compass of Pleasure, and Touch.
